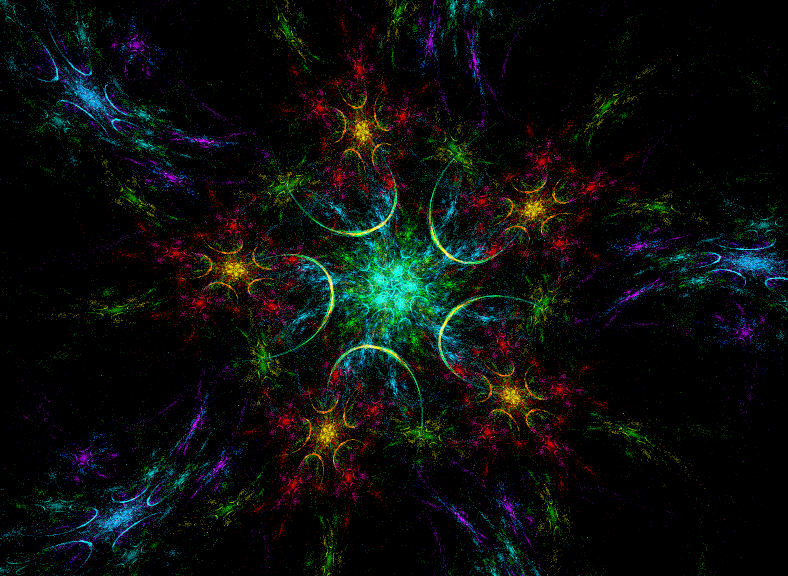

fl4m6e proof of concept

Over the last few days I've been hacking on a little OpenGL fractal flame renderer. The main idea behind fl4m6e is to compute everything on the massively parallel GPU, instead of on a single CPU core as used in flam3. On a sufficiently fast GPU it should be possible to render video in real time; depending on the quality settings, my 5-year-old card varies between 10 frames per second and 10 seconds per frame. Techniques used include:

- texture to texture rendering loop with a fragment shader to compute the iterated function system (Monte Carlo style)

- texture to vertex buffer rendering to get the final points and colours

- vertex array rendering into buffer texture to accumulate the histogram

- colour correction shader to generate the final image
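
To make the data flow concrete, here is a minimal CPU-side sketch in C of what the first three passes compute: iterate a randomly chosen map, take the resulting point, and bump a histogram bin. It is not the fl4m6e code. The three affine maps (a Sierpinski triangle), the 64x64 histogram, the small LCG and all names are assumptions made for illustration, and colour handling and the final colour correction pass are left out.

```c
#include <stdint.h>
#include <string.h>

#define W 64
#define H 64

/* Hypothetical affine map: (x, y) -> (a*x + b*y + c, d*x + e*y + f). */
typedef struct { float a, b, c, d, e, f; } affine_t;

/* Three maps whose attractor is a Sierpinski triangle inside the unit
   square; a stand-in for a real flame genome. */
static const affine_t maps[3] = {
    {0.5f, 0.0f, 0.00f, 0.0f, 0.5f, 0.0f},
    {0.5f, 0.0f, 0.50f, 0.0f, 0.5f, 0.0f},
    {0.5f, 0.0f, 0.25f, 0.0f, 0.5f, 0.5f},
};

/* Small linear congruential generator, so results do not depend on the
   platform's rand(). */
static uint32_t lcg(uint32_t *state) {
    *state = *state * 1664525u + 1013904223u;
    return *state >> 8;
}

/* Runs the chaos game for `iters` steps and accumulates the visited points
   into `hist`. Returns the number of points that landed in the histogram. */
static unsigned long chaos_game(uint32_t hist[H][W], unsigned long iters,
                                uint32_t seed) {
    float x = 0.3f, y = 0.3f;
    unsigned long plotted = 0;
    memset(hist, 0, sizeof(uint32_t) * W * H);
    for (unsigned long i = 0; i < iters; i++) {
        /* Pass 1 on the GPU: apply one randomly chosen map per point. */
        const affine_t *m = &maps[lcg(&seed) % 3];
        float nx = m->a * x + m->b * y + m->c;
        float ny = m->d * x + m->e * y + m->f;
        x = nx;
        y = ny;
        if (i < 20) continue; /* skip points before the orbit has settled */
        /* Passes 2 and 3 on the GPU: read the point out and add one to the
           histogram bin it falls into. */
        int px = (int)(x * W), py = (int)(y * H);
        if (px >= 0 && px < W && py >= 0 && py < H) {
            hist[py][px]++;
            plotted++;
        }
    }
    return plotted;
}
```

On the GPU the loop body runs for many points at once, one per texel, which is where the speedup over a single CPU core would come from.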

Eventually I aim to port it from C to Haskell, and do some fancy stuff with generating appropriate GLSL shader source at runtime from XML descriptions (GLSL shaders have a hardware-limited size and complexity, so it's not feasible to have just one massive shader with switchable options). It won't ever be a drop-in replacement for flam3, not least because OpenGL offers very weak guarantees about image equivalence (or whatever the technical term is): the same code might generate slightly (or not so slightly) different images on different graphics cards. That would, for example, be rather detrimental to the distributed rendering techniques of the Electric Sheep screensaver. But given the realtime rendering goal of fl4m6e, it might be more advantageous to have a peer-to-peer network sharing interesting genomes rather than a server distributing video files (I still can't get over the 88TB/month bandwidth figure quoted on their blog).
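
The runtime shader generation idea can be sketched in C roughly as follows. This is only an illustration under stated assumptions, not the planned design: XML parsing is skipped and the input is an already-parsed list of (variation name, weight) pairs, and the names `library`, `term_t`, `build_shader`, the `points` sampler and the `var_*` GLSL functions are all hypothetical. The point is that only the variations a genome actually uses end up in the shader, which keeps it under the hardware limits.

```c
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical library of GLSL snippets, one per variation. */
typedef struct { const char *name; const char *body; } variation_t;
static const variation_t library[] = {
    {"linear",     "return p;"},
    {"sinusoidal", "return sin(p);"},
    {"spherical",  "return p / (dot(p, p) + 1e-9);"},
};
#define LIBRARY_SIZE (sizeof library / sizeof library[0])

/* One weighted variation, as it might come out of a parsed genome. */
typedef struct { const char *name; float weight; } term_t;

/* Bounded string builder; `ok` drops to 0 once the buffer is too small. */
typedef struct { char *buf; size_t cap, len; int ok; } sb_t;

static void sb_printf(sb_t *sb, const char *fmt, ...) {
    if (!sb->ok) return;
    va_list ap;
    va_start(ap, fmt);
    int n = vsnprintf(sb->buf + sb->len, sb->cap - sb->len, fmt, ap);
    va_end(ap);
    if (n < 0 || (size_t)n >= sb->cap - sb->len) sb->ok = 0;
    else sb->len += (size_t)n;
}

static const variation_t *find_variation(const char *name) {
    for (size_t i = 0; i < LIBRARY_SIZE; i++)
        if (strcmp(library[i].name, name) == 0) return &library[i];
    return NULL;
}

/* Writes GLSL fragment shader source for the given terms into `out`.
   Returns 1 on success, 0 on an unknown variation or a too-small buffer. */
static int build_shader(const term_t *terms, size_t nterms,
                        char *out, size_t cap) {
    if (cap == 0) return 0;
    sb_t sb = { out, cap, 0, 1 };
    out[0] = '\0';
    sb_printf(&sb, "uniform sampler2D points;\n");
    for (size_t i = 0; i < nterms; i++) {
        const variation_t *v = find_variation(terms[i].name);
        if (!v) return 0;
        int seen = 0; /* define each GLSL function only once */
        for (size_t j = 0; j < i; j++)
            if (strcmp(terms[j].name, terms[i].name) == 0) seen = 1;
        if (!seen)
            sb_printf(&sb, "vec2 var_%s(vec2 p) { %s }\n", v->name, v->body);
    }
    sb_printf(&sb, "void main() {\n"
                   "  vec2 p = texture2D(points, gl_TexCoord[0].st).xy;\n"
                   "  vec2 q = vec2(0.0);\n");
    for (size_t i = 0; i < nterms; i++)
        sb_printf(&sb, "  q += %f * var_%s(p);\n",
                  terms[i].weight, terms[i].name);
    sb_printf(&sb, "  gl_FragColor = vec4(q, 0.0, 1.0);\n}\n");
    return sb.ok;
}
```

The resulting string would then be handed to the usual `glShaderSource` / `glCompileShader` calls. A genome that only uses `linear` and `spherical` gets a shader with no trace of `sinusoidal` in it.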

(Rather uncommented) source code is available under the AGPL3+ license, but be warned: it's changing rapidly, and some versions will spew out binary image data on stdout, so read through it before trying it out!

svn checkout https://code.goto10.org/svn/maximus/2009/fl4m6e