Optimizing weights for iterated function systems

An iterated function system (IFS) is a collection of geometric transformations. If the functions are contractive, then the system has an attractor (a fixed point of the system as a whole), which can be plotted by starting from any point, applying one of the functions chosen at random at each step, and plotting all the points you travel through. This is called the chaos game algorithm (there are other plotting algorithms, for example the multiple reduction copy machine). Often the resulting shape is a fractal.
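A minimal sketch of the chaos game, assuming the three "halve the distance to a corner" maps whose attractor is the Sierpinski triangle. The names `CORNERS`, `make_map` and `chaos_game` are illustrative only and are not taken from any existing code:

```python
import random

# Three contractions of the plane, each halving the distance to one corner
# of a triangle (an illustrative choice of IFS).
CORNERS = [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)]

def make_map(corner):
    cx, cy = corner
    return lambda p: ((p[0] + cx) / 2.0, (p[1] + cy) / 2.0)

MAPS = [make_map(c) for c in CORNERS]

def chaos_game(maps, steps, seed=0, skip=20):
    """Return `steps` points visited by the chaos game.

    The first `skip` iterations are discarded so that the arbitrary
    starting point has time to approach the attractor.
    """
    rng = random.Random(seed)
    p = (0.3, 0.3)  # arbitrary starting point
    points = []
    for i in range(steps + skip):
        p = rng.choice(maps)(p)  # apply one map chosen uniformly at random
        if i >= skip:
            points.append(p)
    return points

points = chaos_game(MAPS, 10000)
```

Plotting `points` as a scatter plot should show the familiar triangle with holes.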

To go further, if you count the number of times you plot each point, the counts approach a multifractal distribution over the shape. Varying the probability of each function changes the distribution, but not the shape (unless a probability is exactly 0). An iterated function system with probabilities (IFSP) has one probability for each transformation.
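To illustrate, here is a small sketch that accumulates plot counts on a grid for the same Sierpinski-style maps under two different probability vectors. The grid size, step count and the specific probabilities are arbitrary assumptions chosen for the example:

```python
import random

CORNERS = [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)]  # illustrative IFS

def histogram(probabilities, steps, grid=64, seed=0):
    """Chaos game with one probability per transformation; returns plot counts."""
    rng = random.Random(seed)
    counts = [[0] * grid for _ in range(grid)]
    x, y = 0.3, 0.3
    for _ in range(steps):
        # pick a transformation according to the IFSP probabilities
        cx, cy = rng.choices(CORNERS, weights=probabilities)[0]
        x, y = (x + cx) / 2.0, (y + cy) / 2.0
        col = min(grid - 1, int(x * grid))
        row = min(grid - 1, int(y * grid))
        counts[row][col] += 1
    return counts

def peak(counts):
    return max(max(row) for row in counts)

uniform = histogram([1 / 3, 1 / 3, 1 / 3], 20000)
skewed = histogram([0.8, 0.1, 0.1], 20000)
```

With the skewed probabilities most of the plotted points pile up near one corner, so `peak(skewed)` comes out much larger than `peak(uniform)`, even though both runs approximate the same shape.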

An extension of IFS called graph directed iterated function systems (GDIFS) makes each transformation into a node in a graph, which is more general because some transitions between nodes can be forbidden (by not having a corresponding edge). Naturally, probabilities can be added to GDIFS to get GDIFSP, which has a matrix of probability weights with one value for each possible edge between nodes (if there is no edge, the probability is 0). The graph directs the IFS, and to overload terminology further, the edges have a direction (so you can have A to B without B to A), which makes it a directed graph.
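A hedged sketch of how the weight matrix might drive the chaos game: row `i` of the matrix gives the relative weights for which transformation may follow transformation `i`, and a zero entry forbids that transition. The function name, the 1D maps and the matrix are made up for the example:

```python
import random

def gdifsp_chaos_game(maps, weights, steps, start, seed=0):
    """Chaos game where weights[i][j] is the weight of applying map j after map i.

    Every row needs at least one non-zero entry, otherwise there is no
    transformation that is allowed to come next.
    """
    rng = random.Random(seed)
    n = len(maps)
    current = rng.randrange(n)
    p = start
    trail = []
    for _ in range(steps):
        p = maps[current](p)
        trail.append((current, p))
        # choose the next node using the row of the current node
        current = rng.choices(range(n), weights=weights[current])[0]
    return trail

# Three contractions of the unit interval (illustrative).
MAPS = [lambda x: x / 3.0,
        lambda x: x / 3.0 + 1.0 / 3.0,
        lambda x: x / 3.0 + 2.0 / 3.0]

# No transformation may follow itself: the diagonal edges are missing.
W = [[0.0, 0.5, 0.5],
     [0.5, 0.0, 0.5],
     [0.5, 0.5, 0.0]]

trail = gdifsp_chaos_game(MAPS, W, 1000, start=0.5)
indices = [i for i, _ in trail]
```

An ordinary IFSP is the special case where every row of the matrix is the same vector.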

This all started when someone in Fractal Chats was trying to "balance" one of their fractal artworks. I found a Maths Stack Exchange question and answer, "How to optimally adjust the probabilities for the random IFS algorithm?", that said for an IFSP of similitudes the optimal probability weights are related to the contraction ratios and the fractal dimension. One of the comments on the question references a paper with a proof via multifractal spectrum:

A Multifractal Analysis of IFSP Invariant Measures with Application to Fractal Image Generation

J. M. GUTIÉRREZ, A. IGLESIAS and M. A. RODRÍGUEZ

https://doi.org/10.1142/S0218348X96000042

Abstract

In this paper, we focus on invariant measures arising from Iterated Function System with Probabilities (IFSP). We show the equivalence between an IFSP and a linear dynamical system driven by a white noise. Then, we use a multifractal analysis to obtain scaling properties of the resulting invariant measures, working within the framework of dynamical systems. Finally, as an application to fractal image generation, we show how this analysis can be used to obtain the most efficient choice for the probabilities to render the attractor of an IFS by applying the probabilistic algorithm known as “chaos game”.
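For similitudes whose images do not overlap too much, the relation between weights, contraction ratios and dimension is usually stated as p_i = r_i^D, where r_i is the contraction ratio of transformation i and D is the similarity dimension solving sum_i r_i^D = 1. The following sketch assumes that statement (the symbols and function names are chosen here for illustration) and solves for D by bisection:

```python
def similarity_dimension(ratios, tol=1e-12):
    """Solve sum(r ** d for r in ratios) == 1 for d by bisection.

    Assumes every ratio satisfies 0 < r < 1, so the sum decreases as d grows.
    """
    lo, hi = 0.0, 1.0
    while sum(r ** hi for r in ratios) > 1.0:
        hi *= 2.0  # widen the bracket until the sum drops to 1 or below
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if sum(r ** mid for r in ratios) > 1.0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def balanced_probabilities(ratios):
    """Weights p_i = r_i ** D under the assumption stated in the text."""
    d = similarity_dimension(ratios)
    return [r ** d for r in ratios]

sierpinski_dim = similarity_dimension([0.5, 0.5, 0.5])
sierpinski_probs = balanced_probabilities([0.5, 0.5, 0.5])
unequal_probs = balanced_probabilities([0.5, 0.25])
```

For three maps of ratio 1/2 this gives equal weights of 1/3, as expected by symmetry; for ratios 1/2 and 1/4 the larger map gets the larger weight.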

The maths required to extend this to GDIFSP of non-linear functions is way beyond me (the paper only proves things for similarities, and affine functions are optimized numerically as far as I can tell), and I didn't fancy the maths involved for multifractal spectrum (this time). So I implemented a numerical algorithm that is conceptually quite simple: when plotting each point in the chaos game, compare the current location's plot count with the average plot count (averaged over non-empty locations), and adjust the weight of the current transformation according to whether the location is too dense or not dense enough. This works for any transformations, and for both IFSP and GDIFSP.
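A rough sketch of that idea for a plain IFSP on the unit square. The details here are assumptions made for illustration and are not taken from the autoxaos code: the multiplicative update with a small `rate`, the renormalization after every step, the grid size, and the two example maps.

```python
import random

def optimize_weights(maps, steps, grid=64, rate=0.001, seed=0):
    """Chaos game that nudges weights towards a flatter histogram.

    Assumes the maps keep points inside the unit square.
    """
    rng = random.Random(seed)
    n = len(maps)
    weights = [1.0 / n] * n
    counts = [[0] * grid for _ in range(grid)]
    total = 0      # number of points plotted so far
    nonempty = 0   # number of cells plotted at least once
    p = (0.3, 0.3)
    for _ in range(steps):
        i = rng.choices(range(n), weights=weights)[0]
        p = maps[i](p)
        col = max(0, min(grid - 1, int(p[0] * grid)))
        row = max(0, min(grid - 1, int(p[1] * grid)))
        if counts[row][col] == 0:
            nonempty += 1
        counts[row][col] += 1
        total += 1
        average = total / nonempty  # average over non-empty cells only
        if counts[row][col] > average:
            weights[i] *= 1.0 - rate  # too dense: use this map less
        else:
            weights[i] *= 1.0 + rate  # not dense enough: use it more
        s = sum(weights)
        weights = [w / s for w in weights]
    return weights, counts

# Two similitudes with different contraction ratios (illustrative).
MAPS = [lambda p: (p[0] / 2.0, p[1] / 2.0),
        lambda p: (p[0] / 4.0 + 0.75, p[1] / 4.0 + 0.75)]

weights, counts = optimize_weights(MAPS, 20000)
```

For a GDIFSP the same update would presumably be applied to the matrix entry for the edge just taken, rather than to a single vector entry.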

The average plot count can be calculated efficiently by keeping track of the total count of plotted points and the count of empty locations (start with every location counted as empty, and decrement when incrementing a location from 0). The algorithm requires the histogram of plotting locations to cover the whole limit set. For the non-linear Moebius transformations I used the Riemann sphere (complex plane plus infinity), modelled as two unit discs, using the complex reciprocal for the one nearer infinity. An equirectangular projection (as used in 360 video) would probably also work, or even a cube map (as used in 3D rendering like OpenGL).
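One way this bookkeeping could look, as a sketch only: the class name `SphereHistogram`, the square grid laid over each disc and the grid size are assumptions for the example, not a description of the actual implementation.

```python
import cmath

class SphereHistogram:
    """Plot counts on the Riemann sphere, charted as two unit discs."""

    def __init__(self, grid=64):
        self.grid = grid
        # counts[0] covers |z| <= 1 directly, counts[1] covers |z| > 1 via 1/z
        self.counts = [[[0] * grid for _ in range(grid)] for _ in range(2)]
        self.total = 0
        self.empty = 2 * grid * grid  # starts full, decremented on first hit

    def locate(self, z):
        if cmath.isinf(z):
            disc, w = 1, 0j           # infinity is the centre of the second disc
        elif abs(z) <= 1.0:
            disc, w = 0, complex(z)
        else:
            disc, w = 1, 1.0 / z      # complex reciprocal for the far disc
        # map coordinates from [-1, 1] to cell indices in [0, grid)
        col = min(self.grid - 1, int((w.real + 1.0) / 2.0 * self.grid))
        row = min(self.grid - 1, int((w.imag + 1.0) / 2.0 * self.grid))
        return disc, row, col

    def plot(self, z):
        disc, row, col = self.locate(z)
        if self.counts[disc][row][col] == 0:
            self.empty -= 1
        self.counts[disc][row][col] += 1
        self.total += 1
        return self.counts[disc][row][col]

    def average(self):
        """Average plot count over non-empty cells, in constant time."""
        nonempty = 2 * self.grid * self.grid - self.empty
        return self.total / nonempty if nonempty else 0.0

hist = SphereHistogram(grid=8)
for z in [0.5 + 0j, 0.5 + 0j, 2 + 0j, complex("inf")]:
    hist.plot(z)
```

Grid cells in the corners of each square lie outside the unit disc and can never be hit, but because the average only looks at non-empty cells they do not distort it.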

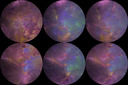

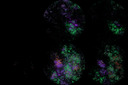

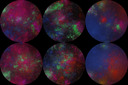

Here is a collection of images of iterated function system fractals of randomly generated Moebius transformations, with (from left to right in each image) random weights, uniform weights, and weights optimized by my algorithm:

You can hopefully see that the right hand side is flatter / less dynamic, and the sparser fractals are more filled out.

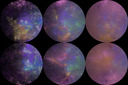

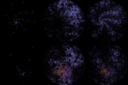

Here are the same transformations optimized as GDIFSP, ie with a matrix of weights rather than a vector:

Whether this is actually useful in practice remains to be seen, but you can find the code in my fractal-bits repository, subdirectory autoxaos:

git clone https://code.mathr.co.uk/fractal-bits.git

cd fractal-bits/autoxaos